Contributions from Visitor Research to CI and ICT4D Theory and Research Methodology

Trish Alexander, School of Computing, University of South Africa. Email: [email protected]

Helene Gelderblom, Department of Informatics, University of Pretoria. Email: [email protected]

Estelle De Kock, School of Computing, University of South Africa. Email: [email protected]

INTRODUCTION

Case Study Context

This paper reflects on the research methodology and associated research methods used to gain a comprehensive understanding of how visitors interact and engage with a biotechnology exhibit at five science centres in South Africa. The case is used to illustrate similarities between Community Informatics (CI), ICT for Development and Visitor Research, and to show how research focussing on the free-choice or self-directed learning that takes place at science centres may also be useful within Community Informatics.

The exhibit studied uses video, audio and a touch-sensitive screen to provide information about biotechnology, related topics and careers. This is in line with the science centres' objective of providing non-formal science education through discovery learning and interactive, "living" science and technology. The centres strive to provide imaginative, enjoyable, hands-on learning experiences to the public in general and to young people in particular. These goals are shared by science centres throughout the world:

"the informal setting of the science center is a rich learning environment that nurtures curiosity, improves motivation and attitudes toward science, engages the visitors through participation and social interaction and generates excitement and enthusiasm, all of which are conducive to science learning and understanding" (Barriault and Pearson 2010, 91)

The five centres are located in three noticeably different kinds of environment. Two are in large cities, both of which are major tourist destinations. They are easily accessible and independently located: they are not on premises owned by a sponsor or other organisation, although one is in a large shopping mall. Two other centres are located on university campuses, one at a well-resourced university in a city and the other at a less well-established university in a rural area. The fifth centre is considerably less ambitious in terms of the number of exhibits and is located in a classroom (science laboratory) at a secondary school in a high-density, low socio-economic residential area.

All of the centres receive frequent, scheduled visits from educational groups, with learners ranging from preschool to tertiary level. These groups make up the majority of visitors at every centre. Two centres (one university campus and the school) are located so as to cater predominantly for less advantaged citizens. Both are visited almost exclusively by organised school or university groups, although efforts have been made to reach out to the surrounding community as well. The remaining three centres are easily accessible and hence are visited by large numbers of tourists, family groups and self-motivated individuals, as well as by the school groups mentioned above.

The research authority (the sponsor) that commissioned the research is also involved in the development and deployment of the exhibit and with the science centres. The tender document gave directives regarding the research methodology to be used: data were to be collected using at least fifty questionnaires or interviews at each centre, and the interns at the centres were to collect the data. The brief stated clearly that independent research of a high standard was required. However, the independent evaluators (the authors) noted that using untrained, unsupervised and relatively junior staff members to collect data is risky, and cited literature indicating that questionnaires, and even exit interviews, yield only limited data from museum visitors (Allen 2004). They therefore proposed that quantitative and qualitative data be collected using questionnaires and exit interviews, supplemented by interviews with senior staff at the centres and by systematic, careful observation of visitors using the exhibit.

Research Areas

To decide which research traditions and paradigm should be used, it is important to examine the research topic and to determine which research discipline it fits within. The research described here can be considered ICT for Development (ICT4D) for two reasons. First, South Africa is accepted as an emerging economy or developing country and is a highly unequal society, with the highest Gini coefficient in the world, 63.1 (data.worldbank.org, Indicators). Second, one of the most evident aims of the science centres is to support schools, educators and communities from less advantaged areas, so the centres have development as a goal.

ICT4D, also known, rather less commonly, as Development Informatics (Heeks 2014), is clearly not synonymous with Community Informatics, but there is a noticeable overlap between them when the community involved is socio-economically disadvantaged and benefits from a project that either introduces ICT or examines the impact of previously introduced ICT on the community (De Moor 2009a; Turpin, Alexander, and Phahlamohlaka 2013; Stillman and Linger 2009). The communities served in the case studied in this paper are, firstly, a well-defined and coherent community of practice: those who want to promote Science, Technology, Engineering, Environmental Studies and Mathematics (STEEM) as disciplines and potential careers; and secondly, visitors from far more inclusive, place-based communities located relatively close to the science centres. Each of these communities has an identity (which may be fairly consistent with those of individual community members or may reflect diversity). All of the visitor communities have a high proportion of young people, whose knowledge of STEEM and interest in related careers can be stimulated by participation in informal and enjoyable learning experiences. Although not all community members are socio-economically disadvantaged, a high proportion of the groups visiting the centres come from under-resourced schools, and the educational goals of science centres fit well with development.

Having established that this project fits into both ICT4D and CI, we look at a third sub-discipline known as Visitor Research, which focusses specifically on the people who visit museums (in particular science museums), science centres, zoos and aquariums, and the many other types of place-based venues ("designed spaces") intended for free-choice, but nevertheless quite focussed, learning. This type of educational research often refers to the community in which the centre is located (Rennie et al. 2010; Falk and Needham 2011), the personal, socio-cultural and physical contexts within which learning occurs (Falk and Storksdieck 2005) and the impact of the centre on less advantaged members of the community (Falk and Needham 2011). Many science centre exhibits use information and communication technologies in some way (Salgado 2013), as elaborated on in the Literature Review, and hence the overlaps between Visitor Research and both ICT4D and CI can be established. The inclusion of this sub-discipline is the unusual aspect of the research presented here, as it proposes a deviation from CI and ICT4D research, neither of which is necessarily linked to a physical place of limited size, and both of which emphasize the communications aspect of ICT. In contrast, Visitor Research uses the Internet and mobile communication only to a limited extent, for example within the building or campus as a means of guiding a visitor through exhibits (Moussouri and Roussos 2013; Hornecker and Stifter 2006). There is some similarity between this type of research and CI or ICT4D research into telecentres or multipurpose community centres, because the idea of free-choice learning resonates with the constructivist learning that both CI and ICT4D draw on in those and other environments.

This Paper

This paper focuses on the research approach used in the case study and not on the results of the investigation into the use of the exhibit. It considers the research context, the stakeholders, and the case study's contribution to knowledge in the sub-disciplines of ICT4D, CI and Visitor Research, rather than the conclusions reached regarding the use of the exhibit. It will, however, use the data collected and the example of the evaluation of the exhibit in the five different contexts to illustrate the need for that research approach. The structure of the paper is as follows. The objectives are explained by describing the problem and then formalising it as a research question. The research methodology of this paper (not of the case being studied) is stated briefly. A literature review follows, which focusses on Visitor Research, related theory and models, and data collection methods; it also describes some examples of technology for free-choice use of ICT by disadvantaged communities in public spaces other than museums and science centres. In the Findings, the case being studied is presented, and it is discussed in terms of the literature in a separate section. Finally, the Conclusion presents the argument that Visitor Research and its associated theory and methods can contribute to the insights of CI and ICT4D researchers into development, communities and context.

OBJECTIVES

Problem Statement

Within the relatively established field of Information Systems (IS), the use of theory is encouraged, but there has been an ongoing concern about the development of native theories. Straub (2012) refutes the claim that this contribution is insufficient by providing quite an impressive list of native IS theories. It is partly for this reason that it has been suggested that Community Informatics, which is considered to need "a stronger conceptual and theoretical base" (Stillman and Linger 2009), might to some extent align itself with IS in terms of a framework. The view that CI makes little use of theory is shared by Stoecker (2005) and Gurstein (2007). Gurstein (2007) says that CI research is practice-oriented and hence not driven by theory or method, and he points out that there is no generally accepted set of concepts or definitions, or even a common understanding of what CI is. He adds that some people consider CI itself to be a methodology of Community Development that happens to use ICT as a primary means of facilitating community communications. However, just as claims regarding a lack of native IS theory are questioned, the lack of theory in CI can also be disputed, although the theories used mostly originated in other disciplines. There are many strong discussions related to social capital, underpinned by Bourdieu (used, for example, by Kvasny (2006) to support Social Reproduction Theory). Goodwin (2012) makes a powerful case for the value of Discourse Theory in CI. Social Network Theory and associated work by Wellman are used (Williams and Durrance 2008). Orlikowski's structurational model of technology and Giddens's structuration theory remain important in CI (Pang, Lee-San 2010; Stillman and Stoecker 2005).

However, the concern goes beyond the use of theory, as there are also disagreements about practical aspects of research (methodological techniques and practices). For example, opinions differ on whether the large number of case studies in CI research is a good thing: some see it as an opportunity for practitioners and scholars to collaborate (Williams and Durrance 2008); others welcome the opportunity it provides for "rich, 'lived' stories about authentic information and communication requirements, rather than the more abstract 'user' requirements often elicited in classical IS development projects" (De Moor 2009a, 5); and a contrasting opinion holds that the case studies are often purely anecdotal and lack rigour (Stoecker 2005).

Action Research is also a common CI research approach (Gurstein 2007; De Moor 2009b; Stillman and Linger 2009). The apparent understanding that CI is meant to address community change, with the community participating actively, implies that this Action Research is in fact Participative Action Research (Stillman and Denison 2014).

There have been various previous attempts to strengthen CI methodology, as De Moor (2009a, 1) explains:

"... my aim was to identify some underlying methodological strands that, when woven together, could help to strengthen the fields of community and development informatics in terms of coherence, generalizability and reusability of research ideas and the practical impact of their implementation."

A very similar concern regarding the academic standing of ICT4D has been voiced by members of that research community, namely that there is little evidence of researchers building on one another's work (Best 2010; Heeks 2007); that there are no standardized methodologies, or even agreement on how research quality can be ensured (Burrell and Toyama 2009); and that there is a tendency to prioritize action over knowledge, with few authors contributing to theory building (Heeks 2007; Walsham and Sahay 2006; Walsham 2013).

This paper does not propose one particular "solution" to the problem; rather, it looks at potentially useful theories and associated methodology from a branch of educational research in order to add to the rich traditions of theory and practice already emerging in the related disciplines of CI, ICT4D and IS.

Research Question

How can theories from Visitor Research add to CI and ICT4D theories and associated methodology?

RESEARCH METHODOLOGY

The research methodology used in this paper is an explanatory case study: the case studied is a contract research project to evaluate a biotechnology exhibit in five contexts. The research process is described and reflected on, and the Contextual Model of Learning is used in order to explain whether the evaluation was effective and why. Hence, we are not reporting on the outcomes of that empirical research but are using it as an illustrative example focussing on the research process used and its effect. The evaluation is fairly typical of Visitor Research, which is being proposed as a topic or stream of both ICT4D and CI research (while also remaining a topic in Science Education).

LITERATURE REVIEW

Visitor Research

As explained in the Introduction, science centres strive to increase visitors' interest in, curiosity about and attentiveness to science, to increase their factual knowledge, and to inspire them to extend their science learning and perhaps eventually to choose a science-related career (Falk and Needham 2011). Visitor Research is in many respects educational research, and since constructivist and socio-cultural theoretical frameworks are becoming dominant in education research generally, these are also favoured in research involving technology-enhanced learning (Kaptelinin 2011) and in studies of free-choice learning during a science centre visit (Barriault and Pearson 2010). Since a science centre is one of many types of community information hub, Visitor Research fits well with Community Informatics research, much of which uses these same theoretical frameworks.

Visitor Research seeks to find out which intrinsic factors, such as identity (Falk 2011; Werner, Hayward, and Larouche 2014), and which extrinsic factors (including context) motivate and promote interaction and self-directed learning by people visiting the centres (Falk and Storksdieck 2005; RSA DST 2005; Moussouri and Roussos 2013).

The Informal Science Education (ISE) program at the National Science Foundation (NSF) (USA) promotes ISE project evaluation and holds that attitudes and practices regarding evaluation have changed dramatically over approximately the last twenty-five years (Allen et al. 2008). In addition, the impact of a particular exhibit, a group of exhibits or the science centre as a whole is frequently assessed in order to satisfy funders that their investment is producing the desired learning outcomes, or at least to show that visitors have positive experiences (Barriault and Pearson 2010), and there are many examples of the resulting reports (Falk, Needham, and Dierking 2014; Schmitt et al. 2010; Horn et al. 2008). Impact studies are difficult and expensive, and hence particular strategies and even tools have been developed, namely the Framework for Evaluating Impacts of Informal Science Education Projects (Allen et al. 2008) and the Visitor Engagement Framework (Barriault and Pearson 2010). A detailed discussion of these tools is beyond the scope of this paper, but evaluation covers many aspects other than gains in conceptual knowledge. These include the following, all related to STEM concepts, processes or careers:

- Attitude towards STEM-related topics or capabilities

- Awareness, knowledge or understanding

- Engagement or interest

- Behavior

- Skills (Allen et al. 2008)

Some of these tools and guidelines have theoretical foundations, such as the Contextual Model of Learning (Falk, Randol, and Dierking 2012; Falk and Storksdieck 2005) and the later Identity-related Visitor Motivation Model (Falk 2011). The Visitor Engagement and Exhibit Assessment Model (VEEAM) underlies the Visitor Engagement Framework (Barriault and Pearson 2010).

Information and Communication Technologies (ICT) in Science Centres

One strategy used to achieve the goals of science centres is the use of Information and Communication Technologies (ICT), which have the potential to help such centres fulfil their aims (Falk and Dierking 2008 cited by Kaptelinin 2011; Quistgaard and Kahr-Højland 2010; Hall and Bannon 2005; Clarke 2013). The design, development and deployment of an information technology-enhanced exhibit in a science centre is a particular example of a CI artefact, as it involves a science centre's use of ICT to add to the learning that occurs there.

The results, however, are frequently not as good as expected (Kaptelinin 2011). Hence the aforementioned models are used to evaluate not only tangible exhibits but also the increasingly common exhibits that use interactive multimedia to simulate scientific phenomena (Londhe et al. 2010). Kaptelinin (2011) calls this use of ICT "technological support for meaning making". Digital (interactive multimedia) exhibits can stand alone or be Web applications accessed via mobile technology (Hornecker 2008; Hsi 2002; Hornecker and Stifter 2006).

Assessing actual use of the artefact by end users (including evidence of uptake), and the reasons for that use, is the primary goal of Visitor Research. Sufficient actual demand for and use of the artefact underpins its long-term viability (the sustainability of associated projects) and its scalability (rollout to an increasing number of sites). Self-reported scales of Attitude and Behavioural Intention to Use cannot measure this adequately. Consequently, there is interest within the Visitor Research stream in the design features of interactive multimedia exhibits that attract visitors and assist them in meaning-making (Kaptelinin 2011; Quistgaard and Kahr-Højland 2010; Barriault and Pearson 2010). Many important design considerations involve Human-Computer Interaction aspects (Allen 2004; Ardito et al. 2012; Hornecker 2008; Reeves et al. 2005; Hall and Bannon 2005; St John et al. 2008; Lyons 2008; Vom Lehn, Heath, and Hindmarsh 2005; Crowley and Jacobs 2002; Dancu 2010; Crease 2006).

Contextual Model of Learning

"In science centers, the learning is much more multi-dimensional and any assessment of the learning experience needs to take into consideration the affective and emotional impacts, the very personal nature of each experience and the contextualized nature of that experience." (Barriault and Pearson 2010, 91)

To take context into account, research is often carried out in the natural setting in which the phenomenon being studied and related events occur (ethnography, case study or field study). When learning is viewed from a socio-cultural perspective, it is important to study it within the social context in which it occurs: in a science centre, this means observing the exhibit-related communication within groups and how interaction with the exhibit and movement within the larger physical space encourage learning (Barriault and Pearson 2010).

The Contextual Model of Learning examines twelve key factors grouped into three contexts (Falk and Storksdieck 2005):

- Personal context: 1. Visit motivation and expectations; 2. Prior knowledge; 3. Prior experiences; 4. Prior interests; 5. Choice and control.

- Sociocultural context: 6. Within-group social mediation; 7. Mediation by others outside the immediate social group.

- Physical context: 8. Advance organizers; 9. Orientation to the physical space; 10. Architecture and large-scale environment; 11. Design and exposure to exhibits and programs; 12. Subsequent reinforcing events and experiences outside the museum.

However, Falk and his co-authors, while claiming that each of these factors influences learning, acknowledge that they have not been able to identify the relative importance of the factors and can as yet claim only that complex combinations of factors influence learning.

Data Collection Methods

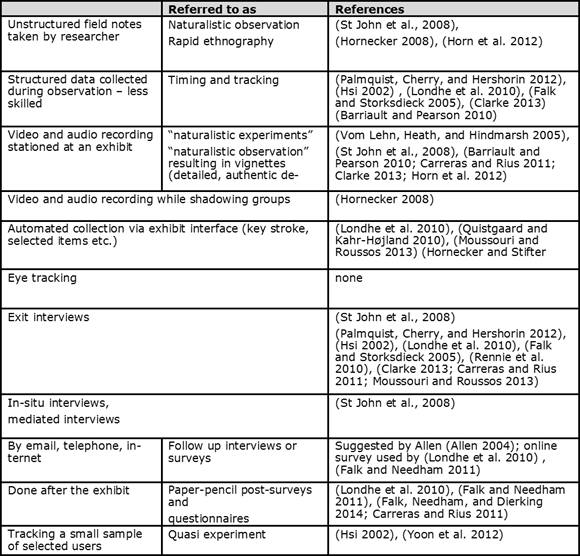

Observation is often used in Visitor Research, but observing groups is more difficult than observing individuals, so video and audio recording (of conversations within the group) is often recommended because it allows detailed analysis of the data (Barriault and Pearson 2010). Exit interviews are also frequently used. Both methods can provide quantitative and qualitative data, and both require skilled design of the data collection forms or transcription sheets, as well as skilled data analysis.

Most published evaluation reports for interactive science exhibitions use more than one means of data collection, and most of the analysis is qualitative, although descriptive statistical analyses are occasionally found (Table 1). The analysis is predominantly qualitative because these evaluations generally provide detailed descriptions of human behaviour rather than data collected from a large number of visitors (such as visitor counts or the frequency of generic actions). This is not universal, however: the Black Holes Experiment Gallery Summative Evaluation (Londhe et al. 2010) uses descriptive statistics extensively.

Technology for Free-choice Use in CI

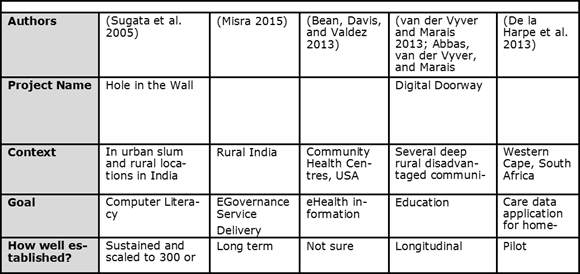

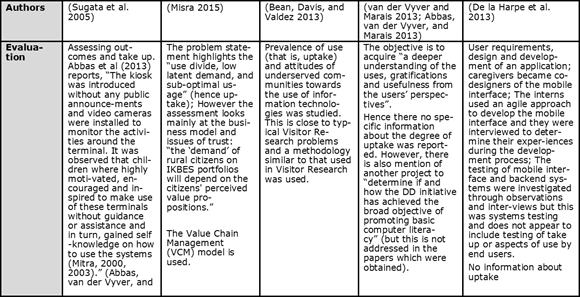

Tables 2 and 3 present five examples of ICT for free-choice use by disadvantaged communities that are not located in museums or science centres. All of these projects can be considered CI as well as ICT4D. The first four are in public spaces. The Hole in the Wall computers are often not in a building but under a roof or awning opening onto a sidewalk (Mitra 2000, photograph reproduced in Abbas, van der Vyver, and Marais 2013). The E-Governance Service Delivery kiosks discussed by Misra (2015) are located in a number of different types of setting (Common Service Centres (CSC) under NeGP, Krishi Vigyan Kendras (KVK), Village Resource Centres (VRC) and Village Knowledge Centres (VKC)), all of which are dedicated to providing resources for accessing information, although some are run for profit. Although these services were kiosk-based and located in centres when the paper was published, the intention was to migrate to mobile technologies (Misra 2015). The third example is kiosks located in Community Health Centres that provide health-related information (specifically on mental health, HIV/AIDS and drug abuse) (Bean, Davis, and Valdez 2013). Locations for the Digital Doorway have also been selected largely to suit its intended users (mainly primary and secondary school learners) (Abbas, van der Vyver, and Marais 2013; van der Vyver and Marais 2013). Hence these digital kiosks are mainly installed at schools in deep rural, disadvantaged communities in South Africa. The final example is not intended to be installed in a fixed location: it is a mobile care data application, and its mobility is essential to its usefulness (De la Harpe et al. 2013).

Some of these projects have been reported on extensively and have been evaluated in various ways. We have not reviewed them extensively, so the evaluations described in Table 3 are simply illustrations of the types of evaluation carried out. The discussion following the presentation of findings from the research case study returns to this point.

FINDINGS

Background to the Research Example

This extensive study took place over eight months, and many people were involved in collecting data, including staff at the five science centres. As described in the Introduction, each centre has a unique environment and caters for different kinds of visitors, some from surrounding communities and others tourists; the location of a centre has a large influence on who visits it and why. Hence the needs of visitors vary, as was evident in the results of the study. For this reason, the data from each centre were analysed and discussed separately in the final report.

The following are some of the questions that needed to be answered.

- Who interacts with the exhibits and who does not interact? (Demographic information)

- How much and what kind of interaction takes place? (Time, apparent interest, apparent understanding, sections attracting attention)

- How engaging and effective are the audio-visual components?

- How easy is the technology to use?

Research Methodology of the Research Example

Informed by the literature, we used a multi-methodological approach for the research described in this case study. The staff at all the science centres suggested that training in data collection would be useful for interns, but that it was even more important for permanent staff members to acquire this skill. They also thought that more senior staff should assist interns with data collection, particularly during the exit interviews and observation. A two-day workshop was therefore held; it was positively received, and we believe it improved the quality of the data collected in the field.

Quantitative and qualitative data were collected through semi-structured interviews with staff members, and through observations, questionnaires and semi-structured exit interviews with visitors. Data collection forms were designed, tested during a pilot study and a training workshop, and revised in response to suggestions from the workshop. The forms were printed and filled in by hand during data collection. Audio recordings made with smart pens were intended to supplement the written data, but few useful recordings were obtained. Video recording, particularly covert recording, has been found to be very useful in similar published studies, but this option was not used, partly for operational reasons such as cost and the risk of theft, and partly for ethical reasons, since many visitors are children and explicit parental permission would be difficult to obtain.

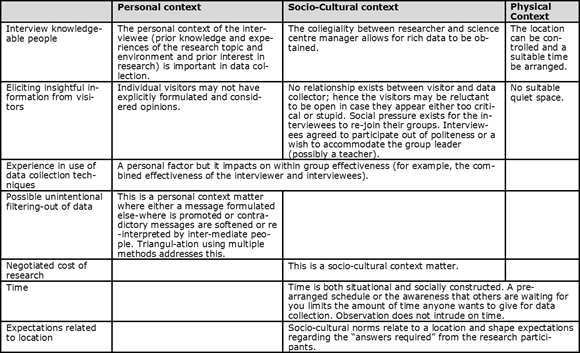

In the final report submitted to the client, a framework from the literature (the Visitor Engagement Framework) was used for the thematic analysis of visitors' responses to the exhibit. In the discussion of the findings that follows, however, we use the Contextual Model of Learning, an analytical theory for Visitor Research. Since this paper's primary objective is to add to CI and ICT4D theories and associated methodology, the Contextual Model of Learning is proposed as a useful theoretical approach, and its value is illustrated by using it to gain insight into the practical challenges that arose in relation to the research design. Such lessons provide tangible and relevant information about these methods, informed by current research. The discussion follows each finding (or sometimes precedes a set of findings) and is written in italics. It is subsequently summarised in Table 4. A second aspect, namely how those contexts affect visitors' responses to an exhibit, is then illustrated very briefly with some examples (Table 5).

Practical Lessons Learned from the Research Example

Interview Knowledgeable People

Visiting the centres early in the research process and obtaining insights from people who are very knowledgeable about the context (the science centre staff) proved extremely useful. The information obtained helped us make the research locally relevant to the various environments by asking the "right" questions in ways that were appropriate for the diverse visitors. This enriched our data beyond what could be obtained from visitors to the centres and broadened the scope of the research while still retaining its focus. These important early interviews also gave us an opportunity to tell the science centre staff what to expect from us and our research, and to work out some of the logistics. The staff members are immersed in the physical and sociocultural context of the science centre and have already made their own meaning regarding the use of an exhibit. It is important to treat them as colleagues, and hence as equal-status participants in the research, but also as insightful information sources.

Difficulty Eliciting Information From Visitors Beyond the Obvious

The literature (particularly the Framework for Evaluating Impacts of Informal Science Education Projects) advises that studies of this sort should be alert to unexpected responses, activity and interaction. The researchers were keen to uncover new information and tried to craft questions that would make respondents think, hoping that this would reveal such information, but many respondents simply skipped questions that they found difficult. The interviewees thus seemed reluctant or unable to reveal aspects of their personal context, such as the prior knowledge, prior experience and prior interest that the interviews were attempting to uncover. Another personal factor was a lack of interest in the research project, as respondents expected no benefit from it. Time constraints and an awareness that the rest of the group was waiting were socio-cultural factors that exacerbated the problem.

Experience in Use of Data Collection Techniques

The data collection skills needed should not be underestimated: unintended outcomes in the research usually related to these skills and to research planning more than to the exhibit itself. For example, it is preferable to carry out interviews in a quiet place so that rich qualitative data can be obtained. Centres that sent staff to the data collection workshop collected better data than untrained contributors did. Skilled and experienced data collectors with more time and a quieter environment might have noticed and addressed some of the factors preventing interviewees from providing useful data, and might have probed more deeply. This was discussed in the workshop, where it was suggested that interviewers explain to interviewees that they too were now "doing scientific research" by taking part in the data collection process.

Data collection was meant to be supported by audio recordings made with smart pens, and training was provided, but although the pens were received enthusiastically, they were little used. Either the user simply said aloud what she wrote (in effect transcribing it for us), or the pens were not used at all, or the environment was so noisy that the best use that could be made of the audio was as evidence of the surrounding racket.

Experience helps interviewers create a bond with interviewees and arouse interest in the research. The personal context of the interviewer (in particular, skills and confidence acquired through prior knowledge and experience) results in a greater choice of interview techniques and greater control over data collection. Therefore, the personal context of the interviewer is as important as that of the interviewee.

Possible Unintentional Filtering-out of Data

The choice of who should collect data is based on cost and convenience, but it influences the results and limits the amount of "hidden" data that is revealed, as embedded staff might have preconceived ideas. This interpretation is based on the fact that there was a strong correspondence between what the science centre staff said in the initial interviews and the data collected at the corresponding centre, and on the fact that the same issues were not always duplicated at other centres. Alternatively, this could mean that these individuals knew and accurately reported the relevant information in the early interviews and that it did not change much between the time when the interviews were conducted and when most of the data was collected. This second "reading" of the results implies that the centres are very different, so the lack of duplication of the issues raised is to be expected. We have no way of determining which of these is true other than by using independent observers to triangulate the data. This is a 'personal context' issue linked to motivation and expectations, but here it is extrapolated to groups of stakeholders (independent researchers, funders, staff at the centre).

Negotiated Cost of Data Collection

The budget is a big consideration for a funder, and when the research is in response to a tender this is a particularly sensitive issue. Hence, issues of research methodology, such as the collection of data by people already in loco and under contract, were prescribed even before the Call for Proposals was issued. The independent researchers who were awarded the contract addressed the type of methodology, who would collect data and what planning and preparation was required from the outset, in their proposal. However, they also needed to be prepared to negotiate on price and to add a data collection workshop at no cost in order to introduce additional ways of collecting data (observation in particular). Although the sponsor fully supported the suggested compromise, the outcomes were not perfect. The observations turned out to be very valuable, as did the early interviews between researchers and science centre managers. This illustrates one way in which the mandates of different stakeholders can affect research, and contract research in particular (a socio-cultural issue).

Time as a Socio-cultural Context

The issue of scheduling proved to be a major factor. The wider socio-cultural context of Visitor Research, particularly where it takes place at a university or school or depends on school groups, means that scheduling has to fit the real-world circumstances of school holidays and other events that require the data collectors' time. This need to coordinate, that is, to plan and reach a mutually acceptable agreement with the science centres in terms of time, is of course evident in all fieldwork, unless the researcher is unaware of the negative effects of imposing a schedule on their "hosts". In this case, only minimal data could be collected from one of the sites, and the greatest scheduling difficulties, despite serious efforts to make arrangements, were experienced at the two least advantaged sites.

Conflicting interests regarding time were also evident at a second level, where a pre-agreed time could not easily be arranged: with the visitors to the centres. Since most visitors are in a group, stopping individuals to interview them or to get them to fill in a questionnaire is intrusive and results in rushed, minimal or incomplete answers and a small sample. Less intrusive data collection methods, namely observation and access to the knowledge that staff have accumulated informally over time, are essential to compensate for this.

Expectations Related to Location

The practical issues and lessons learned regarding data collection discussed above apply across all five science centres. However, there were noticeable differences in socio-cultural norms between communities; we use one extreme example to illustrate this. The smallest centre was located in one classroom of a school in an impoverished section of the city. The researchers were greeted warmly and with great respect; they were unusual visitors in many respects, and their presence was considered an honour (with possible benefits in the longer term). It was clear that the staff at the centre and the school children wanted to make a good impression, and hence the staff and learners focussed more on what they perceived as an evaluation of their responses than on learning. As a result, much more effort was put into answering the questionnaires and interviews than, for example, in a large centre in "the city". Children were on their knees with their ears to the audio speakers to hear exactly what the exhibit soundtrack "said" and to record it exactly. The small section of content was replayed repeatedly so that the "correct" answer could be given. It would have been totally misleading to combine the data from this setting with that of university students who visited a science centre voluntarily as a leisure activity and felt that their role was to point out problems so that they could be fixed.

Summary of Findings

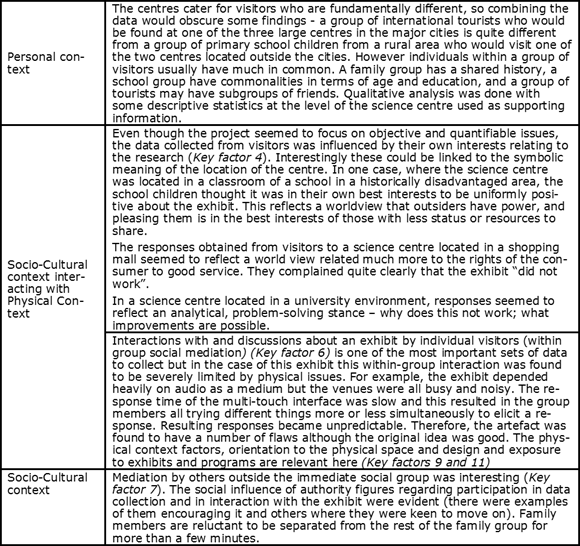

It is important to distinguish between using the Contextual Model of Learning to examine the research process and its more common use in Visitor Research as a device for organising the complexities of learning within free-choice settings. Table 4 summarises the findings provided above and emphasises the roles of the three contexts of the Contextual Model of Learning (see the discussion in the Literature Review) specifically in terms of data collection. Table 5 looks at the roles of the three contexts of the Contextual Model of Learning in the analysis, and hence to a certain extent in the results, of the original research. The key factors indicated in Table 5 are listed in the discussion on the Contextual Model of Learning in the Literature Review.

DISCUSSION

The design issues referred to in Tables 4 and 5 are very practical and relate to the usefulness of the exhibit in a public space, with many groups of visitors and many distractions. However, lessons can be learned from them that relate more generally to CI projects, particularly where an artefact may be used in a public space, for example a mobile device app used while travelling on crowded public transport. These lessons relate to the ease of use of the artefact or system within a noisy and distracting environment, to the form of feedback (the effort required to read or hear, the chunk size, and the use of multiple channels and repeatability to provide redundancy that compensates for missed words), to issues of time and to issues of expectations (how to serve interests that depend on context). Good ideas do not necessarily translate into useful artefacts; genuine and detailed insight into the variety of locations of use and the diversity of users is necessary. In addition, an iterative process, as recommended in design science research, is needed for the design of digital products (exhibits, apps and tools of various sorts) for use by the public, since predictions of usage patterns and barriers to use by diverse end users often prove unreliable. The location of use of mobile devices is even less predictable than is the case for science centres, and the number of distractions is likely to be even greater. Although the design of an artefact is generally not endlessly customisable, end users are often described as though they fall into a small number of homogeneous categories, and additional descriptors that make a big difference may be missed. This relates to both the personal and the socio-cultural context.

Some very interesting examples of technologies or demonstrations of scientific principles are large, elaborate, expensive or not portable, or will only be used briefly (for example, a digitally augmented exhibition on the history of modern media (Hornecker and Stifter 2006)). These cannot feasibly be duplicated and hence are ideal as interactive exhibits for a science centre, where they would be evaluated. However, a science centre could also be used as a temporary location in which to evaluate technology-enhanced artefacts intended to be rolled out to communities at any public space (schools, clinics, shopping centres or multi-purpose community centres). The first four examples described in the section "Technology for free-choice use in CI" and in Tables 2 and 3 could have had preliminary evaluations in a science centre using methodologies suitable for Visitor Research before they were installed in their intended locations. A science centre could even be used to evaluate how members of a community interact with, or use, technology that might not eventually be located in a public space, as long as there is some way of observing or otherwise collecting data, such as logging interactions via a mobile phone. Examples are the artefacts described by Misra (2015) and De la Harpe (2013).

However, the difference between the temporary and the intended subsequent physical context must be taken into account when doing this evaluation. As was found in the analysis in Table 5, the science centre environment (or any public space) usually has more noise and human activity than a private location (a private office or home); this is distracting, and the design of the artefact should take it into account. Understanding the impact of the physical context is important to artefact design, for example for a mobile device app used while travelling on crowded public transport.

There are a number of advantages to doing a preliminary evaluation of a digital artefact in a science centre. Firstly, a science centre is an ideal place to stimulate, inspire or spark an idea during a brief contact with a topic. It has large numbers of diverse visitors who are open to (even intent on) interacting with exhibits in order to learn. However, just as in communities, there are distractions, including alternative activities, and the visitors have a choice regarding which activity to participate in. Hence the visitors are a convenience sample (possibly, in some cases, a representative sample) within an environment that may be quite similar to the eventual environment of use.

Secondly, this is an ideal environment in which to study group interactions with an exhibit involving joint discovery and shared explanations. This links to what is referred to as a minimally invasive educational environment (Sugata et al. 2005), where learning is largely unassisted or unsupervised and there is group self-instruction or peer-assisted learning. Hence it is a suitable space in which to look at multi-user interfaces such as multi-touch screens (Hornecker 2008; Lyons 2008). As this is an informal learning environment, it is also well suited to the study of gamification (in particular, multiplayer educational games).

As seen in our research, the environment (the community or socio-cultural context as well as the physical environment) is important; there may be several science centres in a country, and comparative case study research involving differently located communities and environments is of interest to CI.

Furthermore, a number of data collection methodologies can quite easily be implemented in this environment. The literature supports this idea, but observation (including recorded video) was the most valuable method in our study, as is also reported in the literature (for example Barriault and Pearson 2010). Findings regarding data collection are summarised in Table 4. In a public space such as a science centre, observation can easily be covert or unobtrusive; informed consent can be obtained through notices in the science centre stating that CCTV cameras are used for both security and research and that the images will not be published or used for advertising, so research ethics issues are not expected. Audio recordings of private conversations might need to be handled separately if researchers are interested in shared explanations. It is necessary to provide incentives in order to persuade visitors to spend time and possibly reveal information that they consider private. Bean et al. (2013) had a similar experience in their study of kiosks located in Community Health Centres.

Finally, the existing infrastructure and established patterns of use by visitors provide a stable research support structure; the science centre has existing security and other infrastructure.

The disadvantages, as noted in the literature, are that visitors typically interact only briefly with any exhibit (Hornecker 2008; Hornecker and Stifter 2006; Horn et al. 2012) and that, although certain families or individuals might visit the science centre regularly, it is difficult to track them and they are probably a small minority of visitors. Issues regarding time constraints are described in Table 3. A science centre is not the place to track the ongoing use of technology by particular users. Another disadvantage, noted earlier and resulting from the brief interactions and informal learning, is that it is difficult to identify actual learning gains and to quantify the impact of an exhibit or artefact.

Research methodology and theory from Visitor Research can be applied within a science centre, but even if the research is not intended to take place there, a science centre has resources such as in-house experts with extensive design experience and knowledge of the visitors and these experts can be valuable contributors to artefact design for CI design science research. In our experience the staff at the science centre were enthusiastic supporters of evaluation projects and new (cheap) exhibits.

A question that is less easy to answer is whether Visitor Research methodology can be transferred to CI research outside the science centre. As illustrated by the examples in Tables 2 and 3, the community use of ICT in public venues is similar to ICT use within the specific public space of a science centre. This applies particularly to field studies relating to the evaluation of the human-computer interface and to the uptake and actual use of the ICT (including usability and user experience).

Specifically: What lessons can be learned from Visitor Research methodologies that can be applied to CI research methodology outside science centres?

- A science centre can be used as a supporting research resource

- Physical, personal and socio-cultural contexts and the associated key factors should be taken into account as in the Contextual Model of Learning

- Observation is a primary way of collecting credible data where group interactions, peer-assisted learning, unsupervised engagements with the technology, minimally invasive education and free-choice and self-directed use are involved.

CONCLUSION

ICT4D and CI research can learn from methodologies used to study aspects of learning when visitors interact with exhibits at science centres, since ICT4D and CI projects also aim to build capacity within a community. Hence these fields share a developmental goal, namely to create informal and experiential learning opportunities which may lead to employment opportunities. Visitors to a science centre and community members using technology at a telecentre or using their own mobile phones are active participants whose observable actions are clues to their meaning-making while they engage with the activity. As one rather different example, M-Learning projects are often presented as ICT4D projects, and these have very strong similarities with Visitor Research in terms of self-directed education. Models from Visitor Research could therefore be used, and possibly extended, to provide insights into the use of technology for profitable outcomes. This paper has illustrated this by describing some methodological challenges in a particular project: the evaluation of an ICT-enabled exhibit in a number of science centres.

Just as the physical context, the socio-cultural context and the associated personal visitor identity and context are important in learning, they can be seen to be important in the research process as well. An exhibit that works in one science centre may not, for a variety of reasons, work at another; a research effort in one context may need to be adapted for another. Thus the Contextual Model of Learning can be applied to the researchers' learning, as in this paper (although admittedly this was not the intention of its originators, so this could be seen as an extension of the model), as well as to the visitor's learning, which is how we propose using it in assessing developmental gains in CI and ICT4D. The unit of analysis, however, clearly differs when the model is used in its original way and in this extended way.

The case presented in this paper is used to illustrate ideas and was not used to assess the learning effectiveness of the exhibit. However, throughout the original analysis, the noise, distractions and design of the exhibit formed the physical context and were seen to limit the visitors' ability to engage with the exhibit, and hence any learning; the socio-cultural differences, at the higher level of the community and within groups, were evident in the ways in which individual members responded to (and reported on) their interaction with the exhibit. Personal context was not successfully used to assess learning gains, largely because the other two contexts formed such serious barriers that little individual interaction occurred.